How to Scrape Yelp for Free in 2024 [No-Code]

But why would I need to scrape Yelp in the first place? What can I do with the Yelp dataset?

Why Scrape Yelp?

Scraping Yelp has countless benefits for entrepreneurs, marketers, researchers, and businesses across various industries. Here are some key use cases:

- Market Research: By analyzing Yelp data, you can understand local business landscapes and learn a lot about your competitors and industry trends.

- Competitor Analysis: By scraping Yelp, you can collect crucial information about your competitors. This includes their business details, customer reviews, ratings, and overall performance.

- Lead Generation: Scraping Yelp listings helps you identify B2B leads and prospects in your target market.

- Data-driven Decision Making: Scraping Yelp gives you large-scale, structured information to back your decisions in strategic planning and marketing.

OK, I get it. Scraping Yelp is highly beneficial for me. But it can land me in some serious legal trouble. What if it’s illegal to scrape Yelp?

Is web scraping Yelp legal?

So does it mean scraping Yelp is illegal? Well not exactly.

Or as some dudes on Reddit say:

But why f##k em if we could use the official API? Let’s find out.

Is there any official Yelp API for data extraction?

If you want sample data, this option may be suitable for you. But it’s limited. You can access only 150k business listings from 11 metro cities. So for commercial use cases, this one’s a NO NO.

Then why aren’t we using it? Well Fusion API has limitations like:

- Limited daily requests

- Limited regions

- Won’t return listings with no reviews

Firstly, the API allows only 500 requests per day. This isn’t enough for business use, and Yelp doesn’t allow commercial use of the Fusion API either.

Lastly, it doesn’t show many listings that may appear in Yelp search results. Any listing that doesn’t have a review is not fetched by this API.
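To get a feel for what the 500 requests per day cap means, here's a rough back-of-the-envelope sketch. The page size (`results_per_request`) is my own assumption for illustration, not a value taken from this article, so treat the numbers as ballpark only.

```python
import math

DAILY_REQUEST_LIMIT = 500  # daily cap quoted in this article


def days_needed(total_listings: int, results_per_request: int) -> int:
    """Days required to page through `total_listings` under the daily cap.

    `results_per_request` is an assumed page size, not a documented value.
    """
    requests_needed = math.ceil(total_listings / results_per_request)
    return math.ceil(requests_needed / DAILY_REQUEST_LIMIT)


# Example: 100,000 listings at an assumed 20 results per request.
print(days_needed(100_000, 20))
```

With those assumed numbers, you'd need 5,000 requests, which is 10 days of quota for a single pass.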

So what other options do we have? Well, we can either code a web scraper or use a ready-made script from GitHub. But this isn’t reliable, as Yelp can ban your IP.

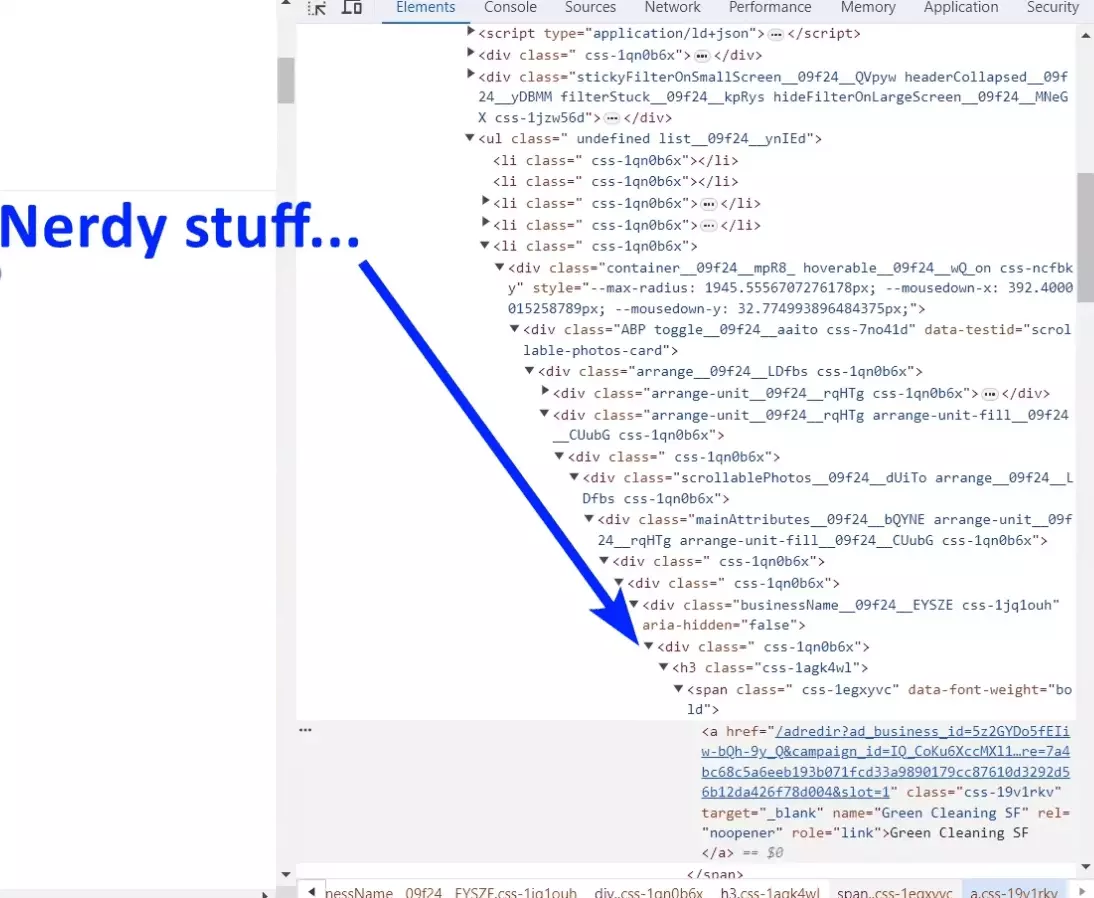

But not everyone is a nerd. Not everyone can read HTML, CSS, JavaScript gibberish and code a perfect web scraper. For context, look at this Yelp results web page:

In this tutorial, I’ll use Lobstr.io, the best Yelp scraper to extract business listings without coding. Let’s roll.

How to scrape Yelp without coding

Key Features

- 13 Data Attributes: You can scrape 13 key data attributes from any Yelp listing. This includes Yelp business name, reviews count, rating score, phone number, and much more.

- 30 Results per minute: This boi fetches 30 business listings per minute for you. Since it’s a cloud-based crawler, a slow or unstable internet connection on your end won’t slow down or interrupt the extraction.

- Schedule Feature: Scrape repeatedly and automatically with the schedule feature. You can set the scraper to run every hour, day, week, or month.

- Developer-ready API: Hey nerds! This boi has got you covered too. Use the developer-ready API and integrate Yelp Search Export into your own apps.

- 3rd Party Integrations: Download your data as a .csv file or export it directly to Google Sheets, Amazon S3, Webhook, or SFTP. This dude seamlessly integrates with all these big bois.

So lobstr.io is cool, but how much does it cost?

Pricing

Lobstr.io offers a really flexible and transparent price range. You can opt for:

- Free Plan: It’s free forever, extract 13,000+ results per month

- Premium Plan: €50 per month, costing around €0.3 per 1000 listings

- Business Plan: €250 per month, costing around €0.19 per 1000 listings

- Enterprise Plan: €500 per month, costing around €0.15 per 1000 listings
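If you're wondering how many listings each paid plan works out to, you can back it out from the price and the per-1000 cost. This is just my own arithmetic on the rounded figures above ("around €0.3" and so on), not official quota numbers, so read the results as approximations.

```python
def implied_monthly_listings(price_eur: float, cost_per_1000_eur: float) -> int:
    """Listings per month implied by a plan price and its per-1000 cost."""
    return round(price_eur / cost_per_1000_eur * 1000)


# (monthly price in EUR, approximate cost per 1000 listings in EUR)
plans = {
    "Premium": (50, 0.30),
    "Business": (250, 0.19),
    "Enterprise": (500, 0.15),
}

for name, (price, per_1000) in plans.items():
    listings = implied_monthly_listings(price, per_1000)
    print(f"{name}: about {listings:,} listings per month")
```

Because the per-1000 costs are rounded, the implied volumes are rough; check the pricing page for the exact limits.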

OK dope, now let’s learn how to scrape Yelp for free with Yelp Search Export.

Scraping Yelp Listings with Lobstr.io: A Step-by-Step Guide

There’s nothing nerdy about Lobstr.io. We’ll scrape Yelp business listings in 6 simple steps.

- Get Yelp search URL

- Create Squid

- Add tasks

- Fine-tune settings

- Launch

- Enjoy

I’ll be scraping all the Restaurants in San Francisco listed on Yelp. Let’s go 💨

Step 1 - Get the Yelp search URL

First and foremost, we’ll get the URL of the Yelp search results page. Go to Yelp and enter a location and a keyword. Then copy the URL from the address bar.

Here we go, our URL is:

We can also split the job into multiple URLs to get more precise results. Let’s filter the search results by neighborhoods. I’m going to target these 4 neighborhoods:

- Alamo Square

- Anza Vista

- Fisherman's Wharf

- Castro

Let’s apply the filter and copy the URLs. Here we go.

- https://www.yelp.com/search?finddesc=Restaurants&findloc=San+Francisco%2C+CA&l=p%3ACA%3ASanFrancisco%3A%3AAlamoSquare

- https://www.yelp.com/search?finddesc=Restaurants&findloc=San+Francisco%2C+CA&l=p%3ACA%3ASanFrancisco%3A%3AAnzaVista

- https://www.yelp.com/search?finddesc=Restaurants&findloc=San+Francisco%2C+CA&l=p%3ACA%3ASanFrancisco%3A%3AFisherman%27sWharf

- https://www.yelp.com/search?finddesc=Restaurants&findloc=San+Francisco%2C+CA&l=p%3ACA%3ASanFrancisco%3A%3A%5BCastro%2CGlenPark%5D
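Curious what's actually packed inside those long URLs? Here's a small sketch that decodes the query string using only Python's standard library. The URL is copied as printed in this article; `query_params` is just a helper name I made up for the example.

```python
from urllib.parse import parse_qs, urlsplit

# One of the neighborhood URLs listed above, copied as-is.
url = (
    "https://www.yelp.com/search?finddesc=Restaurants"
    "&findloc=San+Francisco%2C+CA"
    "&l=p%3ACA%3ASanFrancisco%3A%3AAlamoSquare"
)


def query_params(search_url: str) -> dict[str, str]:
    """Return the decoded query parameters of a search URL."""
    parsed = parse_qs(urlsplit(search_url).query)
    # parse_qs returns a list per key; each key appears once in these URLs.
    return {key: values[0] for key, values in parsed.items()}


for key, value in query_params(url).items():
    print(f"{key} = {value}")
```

The keyword and location come out as plain text, and the `l` parameter is the piece that carries the neighborhood filter. That's the only part that changes between the 4 URLs.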

Now let’s move to step 2.

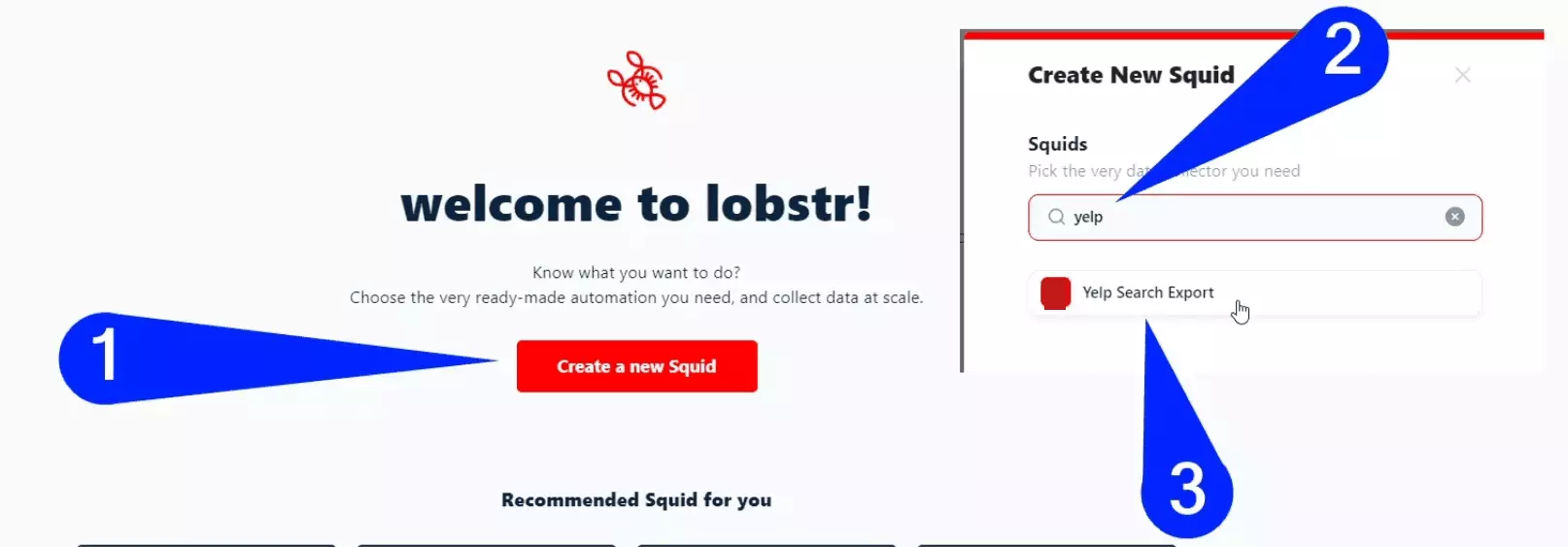

Step 2 - Create the Squid

To create a squid, you’ll need a Lobstr.io account. Don’t have one? It’s free to create an account. You don’t need to enter any credit card information. Go sign up now.

Once you’re in, creating a squid is easy. Click Create New Squid and type “yelp” in the search bar. Click the “Yelp Search Export” squid and you’re ready to configure it.

This will take you to the tasks area. Let’s feed the URLs to our scraper.

Step 3 - Add tasks

Great. All 4 URLs imported. Now let’s explore the settings.

Step 4 - Adjust behavior

In basic settings, you can choose the maximum number of results to scrape per URL. By default it’s 240. If you want to scrape fewer than 240 results, this option is for you.

Next is the “when to end the run” option. You can choose whether you want to end the run when all your daily credits are consumed or when all the tasks are completed.

If you select the second option, your squid will pause once all your daily credits are consumed. The task will resume the next day where it left off.

You can use the Advanced Settings to set the concurrency, i.e. the number of bots launched simultaneously on your squid. The formula is simple: more concurrency = faster scraping.

The free plan allows you to launch only 1 bot. You can upgrade to premium or business plans to add more bots.
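To put "more concurrency = faster scraping" into numbers, here's a rough estimate. It assumes the 30 results per minute from Key Features is a per-bot rate, that every URL hits the 240 cap, and that speed scales linearly with the number of bots. All three are simplifications on my part, so real runs will differ.

```python
RESULTS_PER_MINUTE = 30     # rate quoted in Key Features, assumed to be per bot
MAX_RESULTS_PER_URL = 240   # default cap per URL


def estimated_minutes(url_count: int, bots: int = 1) -> float:
    """Rough run time, assuming full URLs and linear scaling with bots."""
    total_results = url_count * MAX_RESULTS_PER_URL
    return total_results / (RESULTS_PER_MINUTE * bots)


print(estimated_minutes(4))          # 4 URLs, 1 bot (free plan)
print(estimated_minutes(4, bots=2))  # same job with 2 bots
```

Under these assumptions, our 4 URLs would take at most about 32 minutes with 1 bot and about 16 minutes with 2.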

Toggling Unique Results will remove duplicates from the data and No Line Breaks will remove line breaks from the text fields, making it easy to export to Excel.
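If you're wondering what these two toggles boil down to, here's a minimal sketch of the same ideas in plain Python. This is only an illustration, not how Lobstr.io implements them, and the field names (`name`, `url`, `address`) are hypothetical.

```python
def remove_line_breaks(text: str) -> str:
    """Join the lines of a text field with single spaces, skipping blank lines."""
    return " ".join(part.strip() for part in text.splitlines() if part.strip())


def unique_rows(rows: list[dict[str, str]], key: str) -> list[dict[str, str]]:
    """Keep the first row seen for each value of `key`, preserving order."""
    seen: set[str] = set()
    result = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            result.append(row)
    return result


# Hypothetical rows; the same business shows up under two neighborhood URLs.
rows = [
    {"name": "Example Cafe", "url": "example-cafe", "address": "1 Main St\nSan Francisco"},
    {"name": "Example Cafe", "url": "example-cafe", "address": "1 Main St\nSan Francisco"},
    {"name": "Sample Diner", "url": "sample-diner", "address": "2 Oak St"},
]

cleaned = [
    {**row, "address": remove_line_breaks(row["address"])}
    for row in unique_rows(rows, key="url")
]
print(cleaned)
```

Deduplicating on a stable identifier such as the listing URL is safer than using the name, since two different businesses can share a name.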

Notifications

You can choose to receive notifications when a task is successfully completed or when the squid encounters an error. Save your preference and move to the final step.

Step 5 - Launch

But what if I want to scrape regularly? A cool way to do it is by automating the scraper using the schedule feature. You can schedule the squid to run Daily, Weekly, or Monthly.

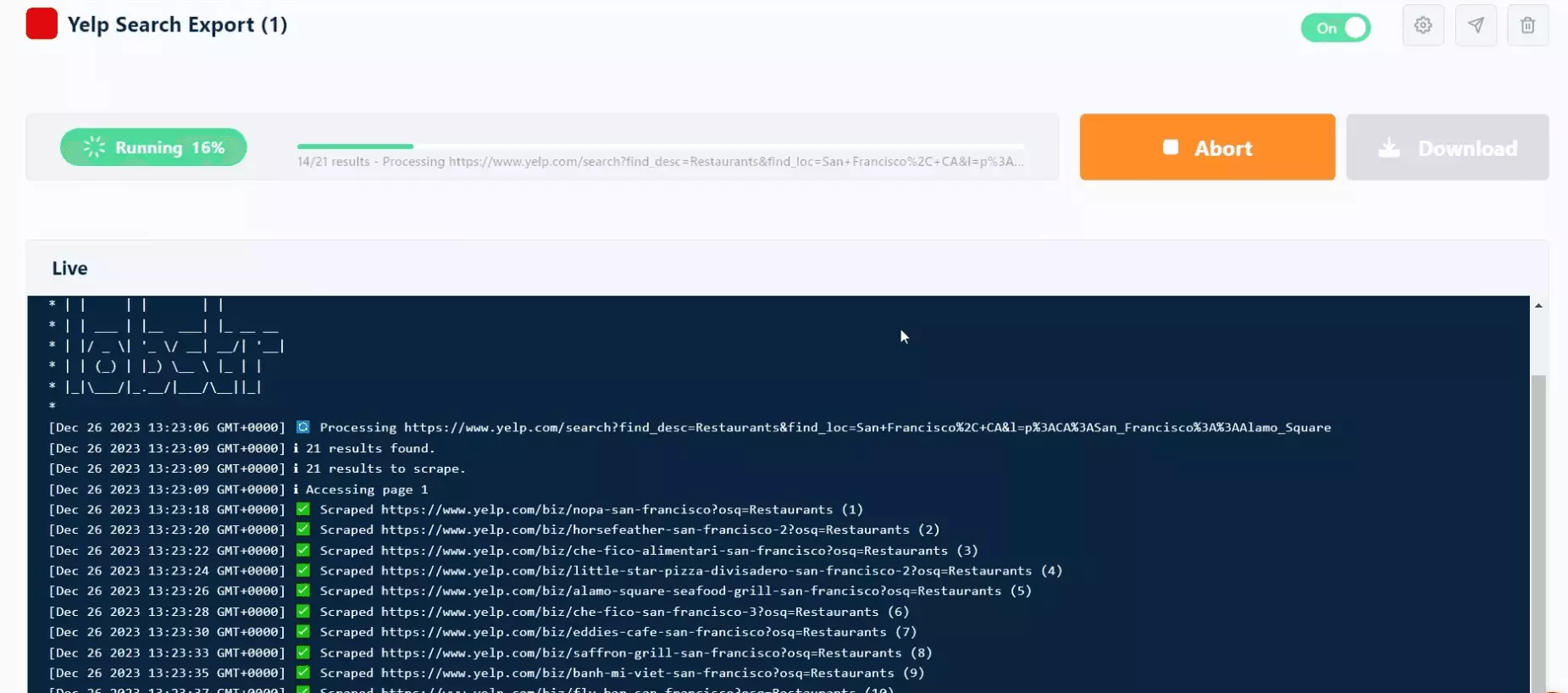

After choosing your launch preferences, you’ll be redirected to the console. This is where you can see extraction progress in real-time. You don’t have to keep this window open.

Close the window or even the web browser and check back later for results. You’ll receive an email when the job is finished. You can download the results as a .csv file.

But I prefer viewing results in Google Sheets. Oh, did I tell you how to use the delivery option? Almost forgot 😞. As mentioned earlier, you can export results directly to 3rd party services.

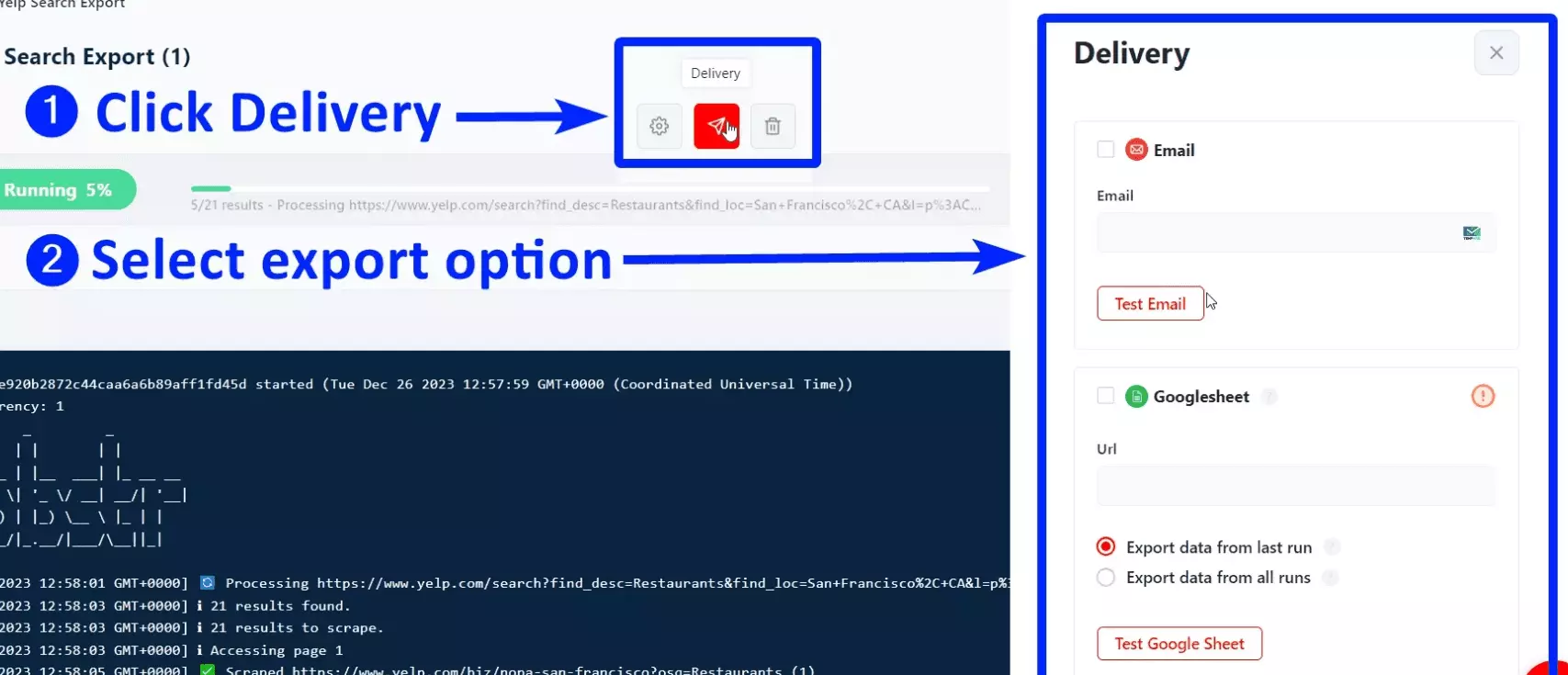

Delivery Settings

To configure your export, click the delivery button and select your desired delivery method. Don’t forget to tick the checkbox to make sure all the data exports to the selected service.

Step 6 - Enjoy

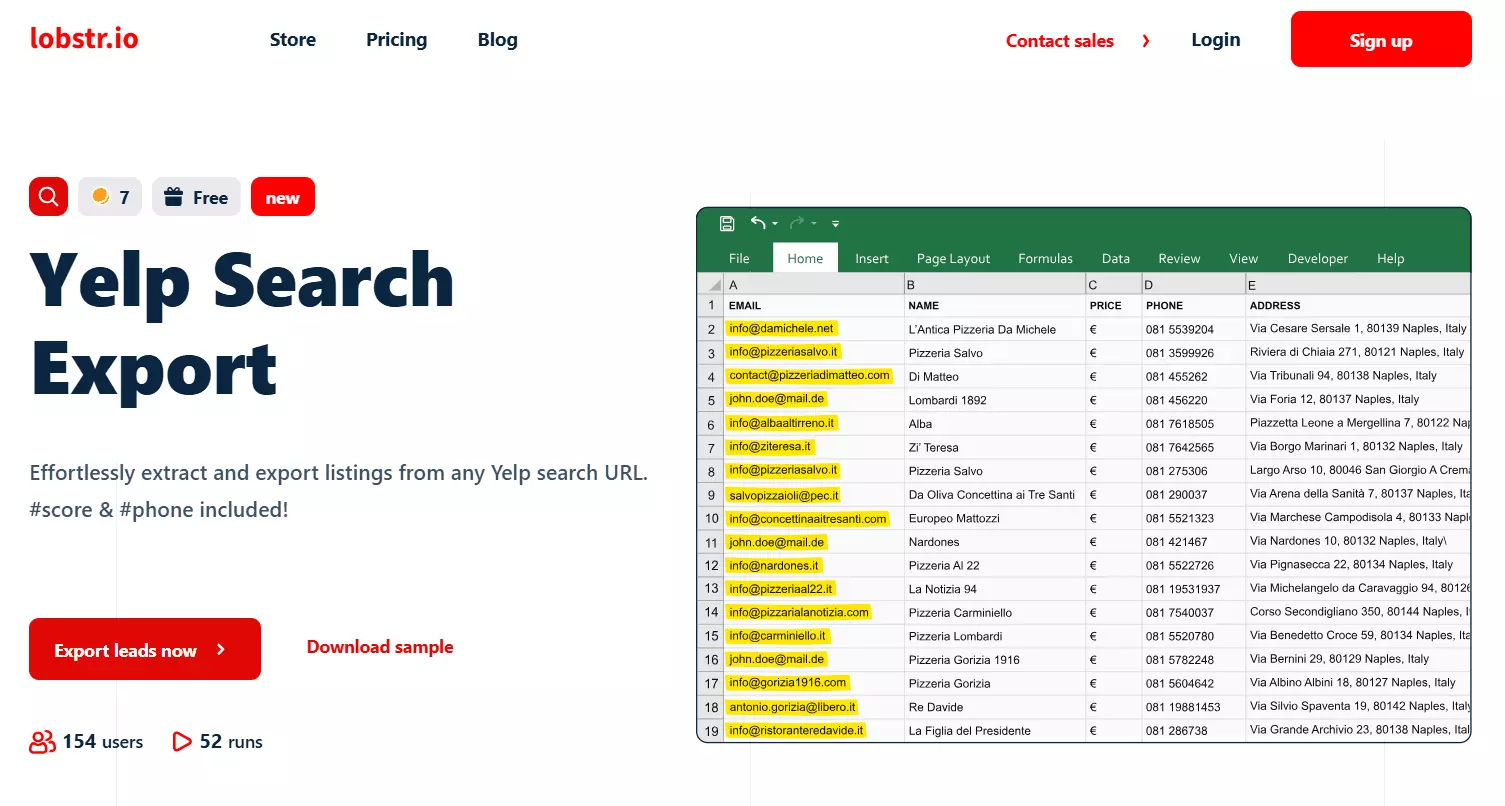

So we extracted 200+ results from 4 different neighborhoods in San Francisco. Our boi brought us all the business data including contact information.

🥳

You can view the downloaded data in Excel or any other spreadsheet application. Or convert it to JSON or any other format you like.
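For the JSON route, a few lines of Python's standard library will do. This is a minimal sketch: the sample columns below are made up for the demo, and your real export will have its own header row.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text (with a header row) to a JSON array of objects."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2, ensure_ascii=False)


# Hypothetical sample; a real export will have different columns.
sample = "name,rating\nExample Cafe,4.5\n"
print(csv_to_json(sample))
```

For a downloaded file, read it first with `open(path, newline="", encoding="utf-8").read()` and pass the text in. Note that every value comes out as a string, so convert ratings or counts to numbers yourself if you need them.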

Limitations

Yelp only shows 240 results per search query. So this scraper is also limited to 240 results per URL.

FAQs

How to bypass the 240 max results limit?

You can split the job into multiple URLs. Just like I did by applying the neighborhood filter. You can use other filters as well. Enable the unique results filter to remove duplicates from the final output.
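Here's a quick sketch of the arithmetic behind splitting: how many URLs you need at minimum for a target number of results, and the ceiling a given set of URLs can reach. It only uses the 240 cap described above; the function names are mine.

```python
import math

MAX_RESULTS_PER_URL = 240  # Yelp's per-search cap described above


def min_urls_needed(target_results: int) -> int:
    """Smallest number of URLs that could cover `target_results`."""
    return math.ceil(target_results / MAX_RESULTS_PER_URL)


def max_results(url_count: int) -> int:
    """Upper bound on results for `url_count` URLs."""
    return url_count * MAX_RESULTS_PER_URL


print(min_urls_needed(1000))
print(max_results(4))
```

These are best-case numbers. Some filtered searches return fewer than 240 listings and filters can overlap, so plan for more URLs than the minimum.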

Should I use VPN for web scraping?

Lobstr.io has its own proxy network. You don’t need a VPN, and you don’t have to deal with CAPTCHAs or worry about other security measures to avoid being banned.

Can I get IP banned for web scraping?

No. Since the scraper runs in the cloud on Lobstr.io’s own proxy network, your IP is not at risk of being banned.

Can I scrape Yelp reviews using this scraper?

Conclusion

That’s it. This was our quick but complete tutorial on scraping Yelp listings without coding. We scraped Yelp without getting banned, and completely for free.

Try Lobstr.io, it’s free. You don’t need to add any credit/debit card information. There’s no limited trial. You can upgrade whenever you want and scrape without getting banned.

Happy Scraping 🦀