How to scrape Vinted products without writing a single line of code

Why scrape Vinted?

Scraping Vinted opens up a world of possibilities, providing valuable insights and opportunities for shoppers, fashion bloggers, market researchers, and sellers alike.

- For shoppers: Easily find the best deals and hidden gems on Vinted by comparing prices and exploring product details effortlessly.

- For fashion bloggers: Stay ahead of fashion trends, analyze pricing patterns, and showcase unique finds to your audience, while discovering and collaborating with influential sellers.

- For market researchers: Gain valuable insights into the online fashion marketplace by analyzing pricing trends, customer preferences, and user-specific data attributes.

- For sellers: Optimize your listings and sales strategy, stay ahead of the competition, and boost sales performance with powerful data analysis capabilities.

How to extract product listings from Vinted without coding

Vinted Products Scraper: Key features

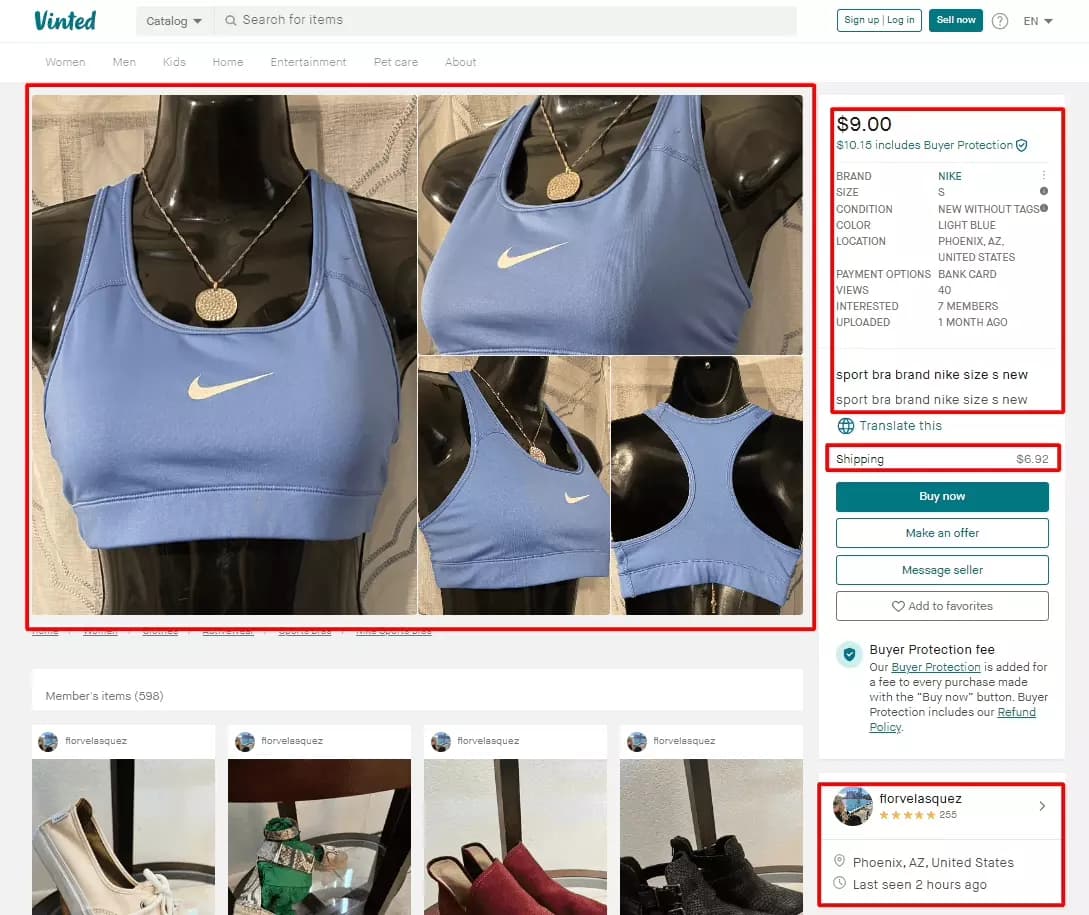

With just a simple search, catalog, or category URL, this bad boy can effortlessly scrape Vinted products in a flash. You can extract 30 unique data attributes from each Vinted product listing, including detailed product specifications and important user details.

But wait, there's more! Our scraper's got you covered on almost all Vinted domains (except .ca), so you can explore listings worldwide. And it's lightning-fast, fetching 150 results per minute, saving you precious time.

Oh, and here's the cherry 🍒 on top: our Vinted products scraper comes with a handy schedule feature. You can set it up for automatic and recurring data collection, making your life even easier. I'll show you just how powerful this feature is later in the article.

Pricing

You can start with our free forever plan, which offers you up to 67,500 results per month. If you need more power, consider our premium plan at just €50 per month, giving you up to 810,000 results per month, which works out to about €0.06 per 1,000 results. And for the most demanding data needs, our most popular business plan at €250 per month provides 6,480,000 results per month, costing you less than €0.04 per 1,000 results.
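If you want to double-check the per-1,000 figures, here is a minimal sketch of the arithmetic. The prices and quotas are the ones quoted above; the `PLANS` dictionary and the `cost_per_thousand` function are just names made up for this illustration.

```python
# Monthly price (EUR) and monthly result quota for each plan, as quoted above.
PLANS = {
    "free": (0, 67_500),
    "premium": (50, 810_000),
    "business": (250, 6_480_000),
}


def cost_per_thousand(price_eur: float, results_per_month: int) -> float:
    """Return the effective price in EUR per 1,000 results."""
    return price_eur / results_per_month * 1000


if __name__ == "__main__":
    for name, (price, quota) in PLANS.items():
        print(f"{name}: EUR {cost_per_thousand(price, quota):.4f} per 1,000 results")
```

Running it gives roughly €0.0617 per 1,000 results for premium and €0.0386 for business, which matches the rounded numbers in the text.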

Scrape Vinted products in 5 simple steps

Step 1 - Create Squid

To get started, we’ll first create a squid. Log in to your Lobstr.io dashboard. If you don’t have an account already, create your free account right away. Once you’re in, click the create new squid button, search for “vinted”, and select the “Vinted Products Scraper” from the list.

Step 2 - Get URL

Once your squid is created, you'll enter the Add Tasks section. Here we’ll add our URL(s) to scrape Vinted products. Let’s first get the URLs. Being a broke sneakerhead, I’ll be scraping Nike trainers (that’s what they call them in the UK), new with tags, in black, white, and grey, listed on Vinted UK. Let’s go to vinted.co.uk and get the URLs.

Why URLs? Why not a single URL with all 3 colour filters applied? By default, Vinted shows only 32 pages for each search or category, so a single URL caps our scrape at 32 pages. To work around this limit, we’ll split the job into multiple URLs and scrape white, black, and grey sneakers separately.

Here we go:

- Black = https://www.vinted.co.uk/catalog?catalog[]=1242&order=newestfirst&statusids[]=6&brandids[]=53&colorids[]=1

- Grey = https://www.vinted.co.uk/catalog?catalog[]=1242&order=newestfirst&statusids[]=6&brandids[]=53&colorids[]=3

- White = https://www.vinted.co.uk/catalog?catalog[]=1242&order=newestfirst&statusids[]=6&brandids[]=53&colorids[]=12

We can add these URLs manually to our scraper, but I prefer the easier way. Save the URLs in a text or CSV file and upload the file full of tasks. This will save you time and effort.
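Copy-pasting from the browser works fine for three URLs. If you ever need dozens of colour or brand combinations, a tiny script can generate the task file instead. The sketch below is entirely optional and rests on a few assumptions: the filter values are simply copied from the URLs above, the file name `vinted_tasks.txt` is made up, and "one URL per line" is my guess at a sensible text-file layout rather than a documented Lobstr requirement.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.vinted.co.uk/catalog"

# Colour IDs copied from the three URLs above.
COLOR_IDS = {"black": 1, "grey": 3, "white": 12}


def build_catalog_url(color_id: int) -> str:
    """Build one catalog URL with the same filters as above, for a single colour."""
    params = [
        ("catalog[]", 1242),
        ("order", "newestfirst"),
        ("statusids[]", 6),
        ("brandids[]", 53),
        ("colorids[]", color_id),
    ]
    # safe="[]" keeps the square brackets unescaped, so the result matches the URLs above.
    return f"{BASE_URL}?{urlencode(params, safe='[]')}"


def write_task_file(path: str = "vinted_tasks.txt") -> list[str]:
    """Write one URL per line (assumed layout) and return the list of URLs."""
    urls = [build_catalog_url(color_id) for color_id in COLOR_IDS.values()]
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(urls) + "\n")
    return urls


if __name__ == "__main__":
    for url in write_task_file():
        print(url)
```

Splitting by colour is just one way to slice the job. Any filter that breaks a big search into chunks of 32 pages or fewer would do the same trick.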

After uploading the URLs, click save and move to settings.

Step 3 - Settings

Let's start with the first setting called "Max Pages." This setting allows you to decide the maximum number of pages you want to scrape per URL. Since Vinted displays 32 pages for each search result or category, you can choose to scrape up to 32 pages per URL. However, for this tutorial, we'll keep it conservative and only scrape the first 2 pages of each URL.

The next decision is when to end the run. There are two options available. The first one is to end the run when all credits are consumed. This option is handy if you intend to gather results from the same task daily. The second one is to end the run only when all tasks are completed. With this option, the run pauses once you've used up all your daily credits and resumes the next day.

If you find yourself running low on credits but have a bunch of tasks to complete, the second option lets you collect all the data in a single run. In this specific job, there are 3 tasks, and my aim is to collect results from all of them. That's why I'm going to select the second option.

Advanced Settings

Now, for those on the premium plan, there's an exciting feature available in the advanced settings called "Concurrency." This feature allows you to choose the number of bots 🤖 to deploy per run. By deploying multiple bots on a single job, you can significantly speed up the scraping process, making it more efficient.

Toggling "Unique Results" helps you avoid getting duplicate results, while "No Line Breaks" removes any unnecessary line breaks from text fields. Once you've configured all the settings according to your preference, don't forget to click on the "Save" button. Now, we can move on to the next step - setting up notifications.
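To make those two toggles a bit more concrete, here is a rough illustration of the kind of clean-up they describe. This is not Lobstr's actual implementation, which isn't documented here. The function names, the `url` and `description` fields, and the sample rows are all invented for the example.

```python
def strip_line_breaks(text: str) -> str:
    """Join the lines of a text field into one line, separated by single spaces."""
    return " ".join(line.strip() for line in text.splitlines() if line.strip())


def unique_results(rows: list[dict[str, str]], key: str = "url") -> list[dict[str, str]]:
    """Keep only the first row seen for each value of `key`, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique


if __name__ == "__main__":
    # Made-up sample rows, only to show the effect of both helpers.
    rows = [
        {"url": "https://example.com/item/1", "description": "Great shoes\nWorn once"},
        {"url": "https://example.com/item/1", "description": "Great shoes\nWorn once"},
        {"url": "https://example.com/item/2", "description": "Brand new"},
    ]
    for row in unique_results(rows):
        print(row["url"], "|", strip_line_breaks(row["description"]))
```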

Notifications

You can choose to receive real-time notifications, keeping you updated on the status of the job. There are two types of notifications you can select: one for successful completion and another for any errors encountered by the scraper. Just pick your preferred notification option and click "Save" to proceed to the next step.

Step 4 - Schedule and Launch

In this step, you have the freedom to choose whether you want to kickstart the run manually or schedule it. We've got this awesome schedule feature that lets you automate the scraper to run at regular intervals, like every X minutes or at specific times, be it hourly, daily, weekly, or even monthly.

So, let's get down to business. We'll schedule our scraper to do its thing every 5 minutes and neatly store all that precious data directly in a Google Spreadsheet. No more manual downloads required. It's going to be super convenient!

Google Sheets setup to export data from Lobstr

To make this happen, follow these simple steps:

- Go to the "How do you want to launch?" section and select "Repeatedly." Now, you can choose the frequency, so go ahead and pick "every 5 minutes." Your timezone will be set automatically based on your region, but don't worry, you can always change it to your liking. Once you're done, click "Save & Exit," and let's move on to the next step.

- Oh, we're not done yet! Now it's time to add that Google Spreadsheet magic. But before that, we need to create a new spreadsheet. So, head over to sheet.new and create one with a name that suits your fancy. Once it's all set up, click on "Share."

You'll see "General Access" options, and you want to select "Anyone with the link." But here comes the important part: change the role from "Viewer" to "Editor." Now, you're ready to copy the URL of the spreadsheet.

- Back in your Lobstr console, find the delivery section and paste that precious copied URL into the Google Spreadsheet input field.

Hit that "Save" button, and voilà! Your recurring data collection setup is complete. All you need to do now is click "Launch," and let Lobstr work its magic.

With this setup, you can relax and let the scraper take care of business every 5 minutes, smoothly collecting and organizing your data in the designated Google Spreadsheet.

Step 5 - Enjoy

That’s it. After launching the scraper, it’ll start fetching Vinted product listings with 30 data attributes. You can close the window and let our cloud-based scraper gather the data for you.

Once an extraction is completed, your data will be stored in the Google Sheet you linked. It’ll keep updating the Google Sheet with results from the latest run every 5 minutes. Here’s what the data looks like in the Google Sheet.
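Since the sheet is shared with anyone who has the link, you can also pull it into a script later if you ever feel like crunching the numbers yourself. The sketch below is optional and assumes the standard Google Sheets CSV export URL pattern. The function names are made up, and no column names are assumed, so rows come back keyed by whatever headers the sheet contains.

```python
import csv
import io
import re
import urllib.request


def sheet_csv_url(share_url: str) -> str:
    """Turn a Google Sheets share/edit URL into its CSV export URL."""
    match = re.search(r"/spreadsheets/d/([a-zA-Z0-9_-]+)", share_url)
    if match is None:
        raise ValueError("This does not look like a Google Sheets URL")
    return f"https://docs.google.com/spreadsheets/d/{match.group(1)}/export?format=csv"


def parse_rows(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def fetch_rows(share_url: str) -> list[dict[str, str]]:
    """Download the sheet as CSV and parse it (needs network access)."""
    with urllib.request.urlopen(sheet_csv_url(share_url)) as response:
        return parse_rows(response.read().decode("utf-8"))
```

Call `fetch_rows()` with the same spreadsheet URL you pasted into the Lobstr delivery section.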

Automatically post products from Lobstr Vinted scraper to Discord

Instead of paying for a Discord bot that is full of bugs, slow, and too nerdy to set up, you can integrate Google Sheets with Discord. As our data automatically updates in the Google Sheet, we can use Zapier to automatically send a message to a Discord server when a new product is extracted.

To get started, sign up for Zapier and create a new Zap. Or you can use this really cool Discord + Google Sheets automation. Connect your Google account and Discord server. Test the Zap and you’re good to go.
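Zapier is the no-code route and it's the one I recommend here. For the curious, this is roughly what such an automation does under the hood: it sends a small JSON message to a Discord webhook. The sketch below is a different technique from the Zapier setup, not a description of it. The function names, the row fields, and the User-Agent string are hypothetical, and you'd need to create a webhook URL in your own Discord server settings.

```python
import json
import urllib.request

DISCORD_CONTENT_LIMIT = 2000  # Discord caps message content at 2,000 characters


def build_payload(row: dict[str, str]) -> dict[str, str]:
    """Format one product row as a Discord message payload."""
    content = " | ".join(f"{key}: {value}" for key, value in row.items())
    return {"content": content[:DISCORD_CONTENT_LIMIT]}


def post_to_discord(webhook_url: str, row: dict[str, str]) -> int:
    """POST one row to a Discord webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(build_payload(row)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Explicit User-Agent (hypothetical name) instead of urllib's default.
            "User-Agent": "vinted-sheet-notifier/0.1",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```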

Conclusion

In a nutshell, Vinted Products Scraper is the ultimate no-code solution for scraping Vinted products hassle-free. It's a user-friendly tool that can easily extract 30 unique data attributes from any Vinted product listing, making it a powerful asset for shoppers, bloggers, market researchers, and sellers alike.

With compatibility across multiple domains (except .ca) and impressive speed, the scraper ensures you get valuable insights without any coding skills. If you're looking to take your Vinted experience to the next level and scrape at scale, consider upgrading to our premium or business plan for uninterrupted data collection.